After first getting involved with Textual, back in 2022, I became acquainted with MkDocs/mkdocs-material. It was being used for the (then still being written) Textual docs, and I really liked how they looked.

Eventually I adopted this combination for many of my own projects, and even adopted it for the replacement for davep.org. It's turned into one of those tools that I heavily rely on, but seldom actually interact with. It's just there, it does its job and it does it really well.

Recently though, while working on OldNews, something changed. When I was working on or building the docs, I saw this:

This was... new. So I visited the link and I was, if I'm honest, none the wiser. I've read it a few times since, and done a little bit of searching, and I still really don't understand what the heck is going on or why a tool I'm using is telling me not to use itself but to use a different tool. Like, really, why would MkDocs tell me not to use MkDocs but to use a different tool? Or was it actually MkDocs telling me this?[^1]

Really, I don't get it.

Anyway, I decided to wave it away as some FOSS drama[^2], muse about it for a moment, and carry on and worry about it later. However, I did toot about my confusion and the ever-helpful Timothée gave a couple of pointers. He mentioned that Zensical was a drop-in replacement here.

Again, not having paid too much attention to what the heck was going on, I filed away this bit of advice and promised myself I'd come back to this at some point soon.

Fast-forward to yesterday and, having bumped into another little bit of FOSS drama, I was reminded that I should go back and look at Zensical (because I was reminded that there's always the chance your "supply chain" can let you down). Given that BlogMore is the thing I'm actively messing with at the moment, and given the documentation is in a state of flux, I thought I'd drop the "drop-in" into that project.

The result was, more or less, that it was a drop-in replacement. I did have to change `$(mkdocs) serve --livereload` to drop the `--livereload` switch (Zensical doesn't accept it, and I'd only added it to MkDocs recently because it seemed to stop doing that by default), but other than that... tool name swap and that's it.
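As a rough illustration of that swap, here's a minimal sketch of the two serve commands side by side. The `serve_cmd` helper is hypothetical, and the `uv run` prefix is an assumption about how the tools get invoked (the real project uses a `$(mkdocs)` Makefile variable, and this only stands in for it):

```shell
# Hypothetical helper: print the serve command for the chosen docs tool.
# Assumption: both tools are run via `uv run`.
serve_cmd() {
  tool="${1:-zensical}"
  case "$tool" in
    # MkDocs needed the switch spelled out to get live reloading.
    mkdocs) echo "uv run mkdocs serve --livereload" ;;
    # Zensical doesn't accept --livereload, so it's simply dropped.
    zensical) echo "uv run zensical serve" ;;
    *)
      echo "unknown docs tool: $tool" >&2
      return 1
      ;;
  esac
}
```

Calling `serve_cmd zensical` only prints the command; running it is left to the caller.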

Testing locally, the resulting site -- while themed slightly differently (and I do like how it looks) -- worked just as it did before, which is exactly what I wanted to see.

There was one wrinkle though, when it came to publishing to GitHub Pages:

    uv run zensical gh-deploy
    Usage: zensical [OPTIONS] COMMAND [ARGS]...
    Try 'zensical --help' for help.
    Error: No such command 'gh-deploy'.

Oh! No support for deploying to the `gh-pages` branch, like MkDocs has! For a moment this felt like a bit of a show-stopper; but then I remembered that MkDocs' publishing command simply makes use of `ghp-import` and so I swapped to that and I was all good.
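For anyone wanting to do the same, here's a minimal sketch of what that replacement can look like. It's not necessarily the exact setup used here: it assumes the built site lands in `site`, that `ghp-import` is installed in the project's environment, and that everything is run via `uv run`. The `run` and `deploy_docs` helpers and the `DRY_RUN` and `SITE_DIR` variables are made up for illustration.

```shell
# Assumption: the built site ends up in ./site unless SITE_DIR says otherwise.
SITE_DIR="${SITE_DIR:-site}"

# Run a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build the docs, then push the result to the gh-pages branch.
# --no-jekyll adds a .nojekyll file; --push pushes the branch after committing.
deploy_docs() {
  run uv run zensical build &&
    run uv run ghp-import --no-jekyll --push "$SITE_DIR"
}
```

Running `DRY_RUN=1 deploy_docs` prints the two commands without executing them, which is a handy way to check things before letting it loose on the real `gh-pages` branch.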

So, yeah, so far... I think it's fair to say that Zensical has been a drop-in replacement for the MkDocs/mkdocs-material combo. Moreover, if the impending problems are as bad as the blog post in the warning suggests, I'm grateful to this effort; in the long run this is going to save a lot of faffing around.

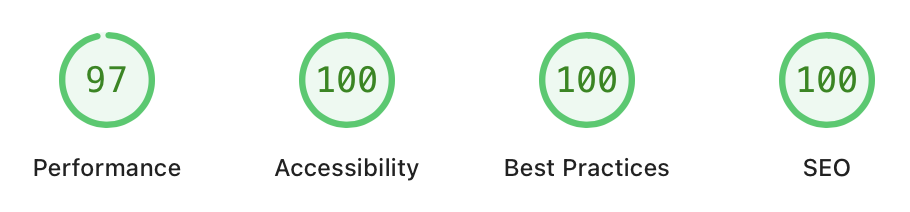

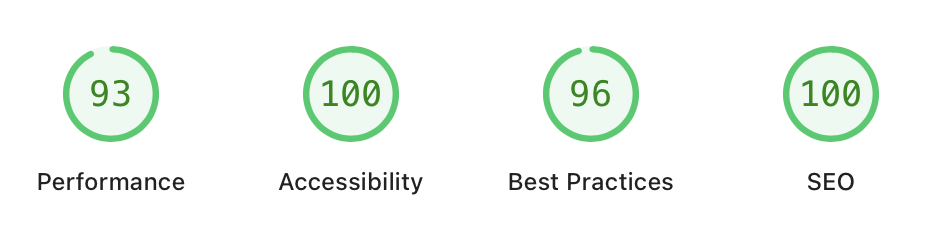

The next test will be to try the docs for something like Hike. They're a little bit more involved, with SVG-based screenshots being generated at build time, etc. If that just works out of the box too, without incident, I think I'm all sorted.